I’m watching AI with great interest and trepidation. Mostly, I don’t know how to think about it.

I have a few foundational beliefs.

- The technology as it already exists has enormous disruptive potential, and we’re very early in seeing its effects. Even if it stopped changing today, people and organizations would keep finding new ways to do things for years. I expect people to be displaced from work that was previously considered “safe” or “stable”, and for the changes to be painful and hard for individuals to absorb.

- Certain proxies for thought or motivation or effort no longer signal what they used to. Things that used to be scarce are abundant. So we need to develop new heuristics to assess the things we still care about and used those proxies to identify. Cover letters and resumes are a good example. They’ve always embedded implicit and invisible attributes like cultural capital, diligence and interest in conforming to business expectations, aptitude and enthusiasm for certain educational and corporate settings, and persuasive ability. For some employers, those traits mattered. They’ll need to find new ways to assess them. Hiring is fully broken right now because signals that used to be meaningful are useless. A half-decent LLM lets anyone use the words that suggest they’ve got those traits. New signals and norms haven’t emerged. (The old standby of networking and personal recommendation is doing even more work in the meantime.)

- I’m still mostly a techno-optimist. For all the flaws and shortcomings I see in the system, I also respect capitalism and the exchange of money for goods and services as an engine for trade and innovation and the solving of problems that has enormous creative potential. Phyl Terry said something on Lenny’s Podcast that resonated with me. The process of creating new products and services is creative disruption. New industries and products rise to meet needs, and old ones disappear. It might be socially beneficial in the long run, but it can be very painful and destructive to those who are displaced. Social supports and a safety net should accompany capitalism for that reason.

- It’s my responsibility as a coach and a citizen to try to understand this technology better: what it’s good for and where it’s misleading or counterproductive. I ask just about everyone in my life how they’re using it, and I’m experimenting myself. At the same time, the environmental impact unsettles me. I don’t know how to reconcile these two strong feelings. For now my compromise is to make a donation to the Nature Conservancy in double the amount I spend on AI tools.

- I still believe a few things. Humans will want and need to solve problems alongside other humans. Critical thinking, likeability, and discernment will continue to matter.

- The phrase “better than the best available human” is one I consider a lot. “Better” is doing a lot of work there, and so is “best available.” I think about shame as a barrier for many people who are seeking help. There’s a risk inherent in showing your problems to another person, especially a person you admire or respect. You don’t want to waste their time. You don’t want to expose yourself as weird or broken or struggling. Talking to an LLM doesn’t carry that risk. That means there’s a lower barrier to engaging with it. So I think people will seek it out earlier than they might look for help from a human expert, and for a different kind of problem. Whether and when this is likely to be fruitful is not immediately obvious.

- Some struggles are worth having. I want to write because I want to push myself to clarify my thoughts and opinions — to myself, first of all, and for the benefit of those I am hoping to help. I lift weights BECAUSE it’s hard, and because doing the hard thing is good for me. Avoiding difficulty or inconvenience is always tempting. I’m trying to act with discernment about when to give in to the impulse and when to resist it. One way I do that is by explicitly prompting AI to ask me at least 3 questions before providing me with an answer. The eagerness of AI to solve things on my behalf feels very dangerous to me, and that’s a small mitigation strategy that forces me to keep thinking about what I really want from the interaction, and the premise of my question.

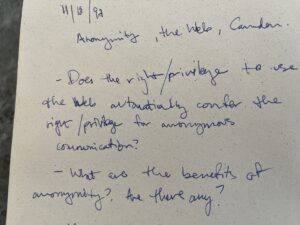

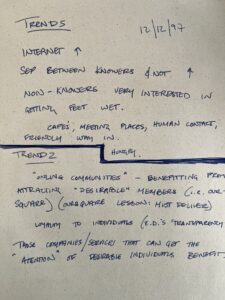

- I remember thinking a lot about the Internet in the early days. Here are some questions I was pondering in 1997: What makes an online community desirable? What are the benefits of anonymity, and when is it harmful? What do we make of the separation between those who have adopted the technology and those who haven’t yet? Those are big questions. I think they’re still worth chewing on. When I feel impatient to know what AI will mean, it’s helpful for me to remember that I’ve lived through another big disruption, which has left some things unrecognizable and others just about intact.

- Whenever I get confused or disheartened, my mental method is to pull back. What was true about humans when we were living in small hunter-gatherer tribes? What will be true when we are in bubbles on Mars? What will we want and need from one another? How can we build those skills and find outlets for the things we like to do, even as the world is changing?

- My other method of making sense of confusing things is to look for the smartest people I can find, especially those who see the world very differently than I do, and to listen to and watch them. I’d value your thinking about AI, how you’re using it, what you love and fear, and whose thinking has influenced you the most.